Combating Machine Learning Bias: Strategies for Fairer Models

Machine learning has transformed many industries and has the potential to greatly affect our lives. However, one of the major challenges with machine learning algorithms is bias. Bias occurs when an algorithm’s outputs systematically disadvantage certain individuals or groups based on characteristics such as race or gender. This can lead to unfair outcomes and perpetuate existing inequalities in society.

In order to address bias in machine learning, it is crucial to develop strategies that ensure fair and ethical AI models. These strategies involve identifying and mitigating different types of biases, improving data quality and diversity, implementing transparent and explainable algorithms, and incorporating human oversight in the decision-making process. By addressing bias in machine learning, we can strive towards developing fairer and more inclusive AI models that benefit everyone.

Types of Bias in Machine Learning

When it comes to machine learning algorithms, bias can manifest in various forms. Understanding these different types of bias is crucial for creating fair and unbiased AI models. Let’s dive into the different categories of bias that can occur in machine learning:

- Algorithmic Bias: This type of bias arises from the design of the algorithm itself, for example through the choice of objective, features, or decision thresholds. It can lead to discriminatory outcomes that reinforce existing inequalities.

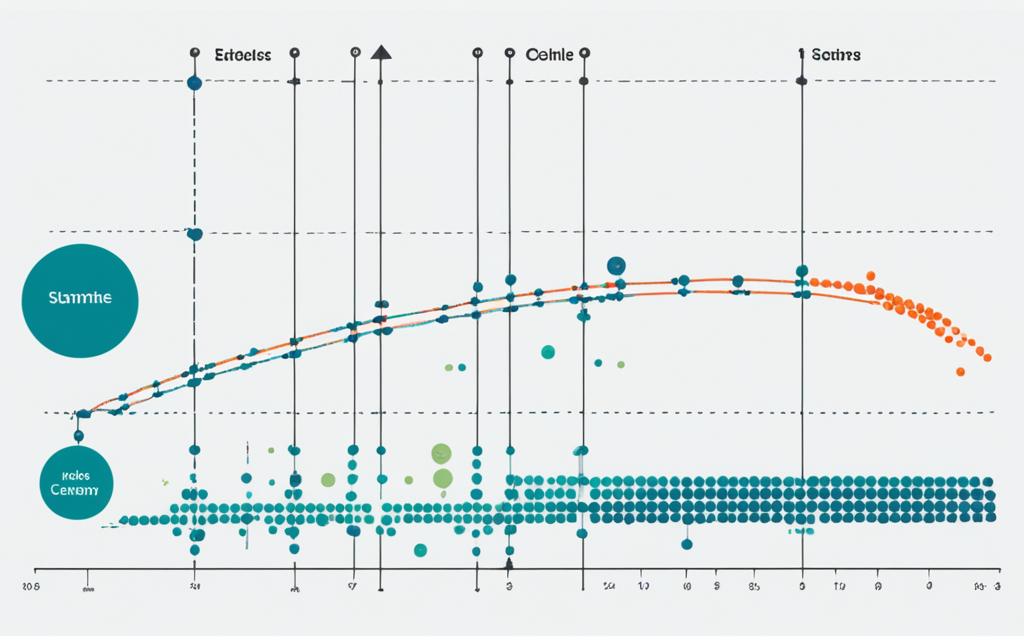

- Data Bias: Data used to train machine learning algorithms can be biased, resulting in biased decision-making. Biases in the training data can be unintentionally encoded into the algorithm, leading to biased predictions and unfair outcomes.

- Selection Bias: Selection bias occurs when the data used to train an algorithm is not representative of the population it is intended to serve. This can result in inaccurate and skewed results that do not reflect the diversity and nuances of the real-world population.

- Confirmation Bias: Confirmation bias happens when machine learning algorithms reinforce existing biases and stereotypes, for example when a model’s past decisions shape the data it is later retrained on. This can perpetuate discriminatory patterns and further marginalize certain groups.

Identifying and addressing these types of bias are essential steps towards creating fairer and more inclusive machine learning models. By understanding the specific biases that can occur, we can develop strategies to mitigate their impact and ensure the ethical deployment of AI technology.
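One way to make "identifying bias" concrete is to compare how often a model gives a positive decision to each group. The sketch below computes per-group selection rates and the gap between the highest and lowest rate (often called the demographic parity difference). The function names and the toy data are illustrative assumptions, and this is only one of several possible fairness measures.

```python
from collections import defaultdict


def selection_rates(groups, predictions):
    """Return the share of positive predictions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, pred in zip(groups, predictions):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(groups, predictions):
    """Gap between the highest and lowest per-group selection rate."""
    rates = selection_rates(groups, predictions)
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: group membership and binary model decisions.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
predictions = [1, 1, 1, 0, 1, 0, 0, 0]

rates = selection_rates(groups, predictions)
gap = demographic_parity_difference(groups, predictions)
print(rates)
print(gap)
```

A gap near zero means the groups receive positive decisions at similar rates. A large gap is a signal to investigate, not proof of discrimination on its own.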

No single fix is enough: tackling bias takes diverse data collection, transparent algorithms, and human oversight together. The next section explores strategies to tackle bias and promote fairness in machine learning models.

Strategies to Address Bias in Machine Learning

Addressing bias in machine learning requires combining several strategies: auditing and correcting the training data, improving data quality, using transparent algorithms, and keeping humans in the loop. One crucial approach is to thoroughly analyze the training data to identify any biases and take proactive steps to remove or reduce them. This involves examining whether the data is diverse and representative of the population the model is intended to serve.
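A simple starting point for this kind of audit is to compare each group's share of the training data with its share of the target population. The sketch below assumes the population shares are known from an external source. The group labels, the shares, and the 0.1 flagging threshold are all hypothetical values chosen for illustration.

```python
from collections import Counter


def representation_gaps(sample_groups, population_shares):
    """Return sample share minus population share for each group."""
    counts = Counter(sample_groups)
    n = len(sample_groups)
    return {
        group: counts.get(group, 0) / n - share
        for group, share in population_shares.items()
    }


# Hypothetical training set: 8 rows from group "a", 2 rows from group "b".
training_groups = ["a"] * 8 + ["b"] * 2
# Assumed reference shares for the population the model will serve.
population_shares = {"a": 0.5, "b": 0.5}

gaps = representation_gaps(training_groups, population_shares)
# Flag groups whose share falls more than 0.1 below the reference
# (an arbitrary threshold for this sketch).
underrepresented = [g for g, gap in gaps.items() if gap < -0.1]
print(gaps)
print(underrepresented)
```

Negative gaps mark groups that are underrepresented relative to the reference population and may need additional data collection.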

To improve data quality, it may be necessary to collect additional data that better represents different demographic groups. This exposes the algorithm to a wider range of experiences and contexts, which reduces the risk that it performs well only for the majority group. Data augmentation techniques, such as oversampling underrepresented groups or generating synthetic data, can also be used to increase the diversity of the training data and reduce bias.
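As a minimal sketch of oversampling, the function below duplicates randomly chosen rows from smaller groups until every group is as large as the largest one. The row layout (dictionaries with a `group` key), the function name, and the toy data are assumptions made for illustration. It is plain random oversampling, not synthetic data generation.

```python
import random
from collections import Counter, defaultdict


def oversample_to_balance(rows, group_key, seed=0):
    """Randomly duplicate rows from smaller groups until all groups
    match the size of the largest group."""
    if not rows:
        return []
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible
    by_group = defaultdict(list)
    for row in rows:
        by_group[row[group_key]].append(row)
    target = max(len(members) for members in by_group.values())
    balanced = []
    for members in by_group.values():
        balanced.extend(members)
        # Sample with replacement to fill the gap up to the target size.
        balanced.extend(rng.choices(members, k=target - len(members)))
    return balanced


# Hypothetical data: 6 rows for group "a" and 2 rows for group "b".
rows = [{"group": "a", "label": i % 2} for i in range(6)]
rows += [{"group": "b", "label": i % 2} for i in range(2)]

balanced = oversample_to_balance(rows, "group")
counts = Counter(row["group"] for row in balanced)
print(counts)
```

Because the added rows are exact copies, oversampling changes group proportions without adding new information, so it complements collecting more representative data rather than replacing it.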

In addition, employing explainable algorithms is crucial to understanding and addressing bias in machine learning. By using algorithms that provide transparent explanations for their decisions, researchers and stakeholders can gain insights into how bias may be influencing the model’s outcomes. This transparency enables the detection and mitigation of biased patterns and contributes to a fairer AI system.
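To illustrate what a transparent explanation can look like, the sketch below uses a linear scoring model, where each feature's contribution to the score is simply its weight times its value. The feature names, weights, and applicant values are hypothetical. Real models are often more complex and may need dedicated explanation tools.

```python
def explain_linear_score(weights, bias, features):
    """Return the score and each feature's additive contribution to it."""
    contributions = {
        name: weights[name] * value for name, value in features.items()
    }
    score = bias + sum(contributions.values())
    return score, contributions


# Hypothetical weights and one applicant's feature values.
weights = {"income": 0.5, "years_employed": 0.25, "zip_code_risk": -1.0}
applicant = {"income": 4.0, "years_employed": 2.0, "zip_code_risk": 1.5}

score, contributions = explain_linear_score(weights, 0.0, applicant)
# Rank features by the size of their effect, regardless of sign.
ranked = sorted(contributions, key=lambda name: abs(contributions[name]), reverse=True)
print(score)
print(contributions)
print(ranked)
```

Seeing that a feature such as the hypothetical `zip_code_risk` pulls the score down sharply gives reviewers a concrete place to ask whether that feature is acting as a stand-in for a protected characteristic.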

Lastly, human oversight plays a vital role in ensuring fairness in machine learning models. By involving human experts who can review and interpret the algorithm’s outputs, biases and discriminatory patterns can be identified and rectified. Human oversight also provides an opportunity to challenge and question the decisions made by the model, reducing the risk of unjust outcomes.
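One common way to build in human oversight is to let the model decide only when it is confident and send everything else to a person. The sketch below assumes the model outputs a probability between 0 and 1. The function name, the route labels, and the 0.3 and 0.7 thresholds are hypothetical and would need to be set for the specific application.

```python
def route_decision(probability, low=0.3, high=0.7):
    """Automate only confident decisions and send the rest to a human."""
    if probability >= high:
        return "approve"
    if probability <= low:
        return "deny"
    return "human_review"


# Hypothetical model scores for four cases.
scores = [0.95, 0.55, 0.10, 0.68]
routes = [route_decision(p) for p in scores]
print(routes)
```

Widening the band between `low` and `high` sends more cases to reviewers, which trades throughput for closer scrutiny. Automated decisions can still be sampled for periodic human audit.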

FAQ

What is bias in machine learning?

Bias in machine learning refers to systematically unfair outcomes that occur when algorithms disadvantage certain individuals or groups based on their characteristics, such as race or gender.

What are the different types of bias in machine learning?

There are several types of bias in machine learning, including algorithmic bias, data bias, selection bias, and confirmation bias.

How can bias in machine learning be addressed?

Bias in machine learning can be addressed by strategies such as identifying and mitigating bias in training data, improving data quality and diversity, implementing transparent and explainable algorithms, and incorporating human oversight in the decision-making process.